Technical SEO is the foundation of a strong online presence. It involves optimizing the technical aspects of your website to make it search-engine-friendly.

Search Engine Optimization (SEO) serves as a cornerstone of digital marketing, playing a crucial role in driving organic traffic and enhancing online visibility.

While On-page SEO and content strategies often take center stage, Technical SEO is the unsung hero working behind the scenes to ensure your website is not only accessible but also easily crawlable and indexable by search engines.

It addresses the structural elements of your site, enabling search engines to understand and rank your content effectively.

Whether you’re a complete novice starting from scratch or someone looking to refine their existing knowledge, this comprehensive guide to technical SEO will walk you through the fundamentals and advanced techniques.

By mastering these elements, you can build a robust foundation for search visibility and long-term success in the competitive digital landscape.


Part 1: Technical SEO Basics

What Is Technical SEO?

Technical SEO refers to the optimization of a website’s infrastructure to improve its crawling, indexing, and ranking in search engine results.

Unlike on-page SEO, which deals with content and keywords, technical SEO ensures that the underlying framework of your site supports efficient communication with search engines.

It focuses on non-content elements such as:

- Website architecture: Ensures logical structure and easy navigation for both users and search engines.

- Site speed: Improves loading times, which influence rankings and are a major component of user experience.

- Mobile responsiveness: Adapts your website’s design and functionality to various screen sizes and devices.

- Security protocols (e.g., HTTPS): Builds trust and protects user data, and HTTPS also acts as a lightweight ranking signal.

- Structured data and schema markup: Helps search engines better understand your content and can make it eligible for enhanced search result features.

The ultimate goal of Technical SEO is to make your website as user-friendly, accessible, and search-engine-friendly as possible, ensuring it meets modern web standards and improves visibility.

How Complicated Is Technical SEO?

At first glance, technical SEO might seem daunting due to its jargon-heavy nature, reliance on specific tools, and the occasional need for coding knowledge.

Terms like “canonicalization,” “crawling,” and “schema markup” can feel overwhelming for beginners, and the technical aspects may appear inaccessible without a background in web development.

However, mastering the fundamentals is not as complicated as it seems and can be achieved without advanced technical skills. The key is to start small and build confidence over time.

For instance, you can begin with straightforward tasks such as fixing broken links, optimizing your robots.txt file to guide search engines on which pages to crawl, or submitting an XML sitemap to ensure your site is discoverable.

These actions often require minimal effort but can make a significant impact on your site’s search visibility.

Once you’re comfortable with these basics, you can gradually tackle more advanced areas like implementing canonical tags to resolve duplicate content issues or improving Core Web Vitals metrics such as loading speed (Largest Contentful Paint) and responsiveness (Interaction to Next Paint).

As an example, consider a website owner noticing that several internal links lead to 404 error pages.

By identifying these broken links with a crawler such as Screaming Frog (which has a free version) or Ahrefs and updating them to point to the correct URLs, they not only enhance user experience but also ensure search engine crawlers can access important content.

This simple task requires no coding knowledge but provides immediate SEO benefits, laying the groundwork for tackling more technical challenges in the future.

Part 2: Understanding Crawling

Crawling is the process through which search engines, like Google, discover new or updated content on your website. This process involves specialized programs called bots or spiders that systematically browse web pages.

These bots follow links from one page to another, gathering information and assessing the content to determine its relevance, quality, and value for users.

Crawling is the first step in the search engine process, ensuring that new content can be indexed and later retrieved during a search query.

For example, if you publish a blog post with links to related articles on your site, Google’s crawler will follow those links, allowing it to map out your site structure and identify all connected pages.

A well-optimized internal linking strategy helps improve the crawler’s ability to navigate and understand your site effectively.

How Crawling Works

Search engine bots navigate your site by following hyperlinks, much like a human user exploring web pages. They typically begin at a starting point, such as your homepage, and proceed to follow internal links to discover additional pages on your site.

For instance, a bot might start at https://example.com, follow a link to https://example.com/blog, and then move on to https://example.com/blog/article1.

This process helps search engines understand the hierarchy and structure of your website, enabling them to create a map of how different pages connect.

A practical example is an e-commerce website: if the homepage links to category pages like “Men’s Clothing” and “Women’s Clothing,” and these category pages further link to individual product pages, bots will follow these links to discover and crawl all the product pages.

A logical and clear linking structure helps bots efficiently discover all important pages.
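To make the link-following process concrete, here is a minimal Python sketch of a breadth-first crawl over a small, hypothetical link graph. The SITE dictionary stands in for pages a real crawler would download and parse. The sketch records each page’s click depth from the homepage, and any URL it never reaches is one that bots relying on links alone would miss.

```python
from collections import deque

# Hypothetical site: each URL maps to the internal links found on that page.
SITE = {
    "https://example.com/": ["https://example.com/mens", "https://example.com/womens"],
    "https://example.com/mens": ["https://example.com/mens/shirt-1"],
    "https://example.com/womens": ["https://example.com/womens/dress-1"],
    "https://example.com/mens/shirt-1": ["https://example.com/"],
    "https://example.com/womens/dress-1": [],
    "https://example.com/orphan-product": [],  # no other page links here
}


def crawl(start: str, site: dict[str, list[str]]) -> dict[str, int]:
    """Breadth-first crawl from start; returns each discovered URL's click depth."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        url = queue.popleft()
        for link in site.get(url, []):
            if link not in depth:  # visit each URL only once
                depth[link] = depth[url] + 1
                queue.append(link)
    return depth


found = crawl("https://example.com/", SITE)
for url, clicks in found.items():
    print(clicks, url)
print("Never discovered:", sorted(set(SITE) - set(found)))
```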

Robots.txt

The robots.txt file is a small text file located in the root directory of your website that plays a significant role in controlling how search engine bots crawl your site.

It acts as a set of instructions for search engines, telling them which parts of your site they can or cannot access.

For instance, you might want to restrict bots from crawling certain areas of your website, such as admin sections, test pages, or private user data directories.

This file is particularly helpful in optimizing your site’s crawl budget, which is the number of pages search engines are willing and able to crawl on your site within a given period.

By blocking unimportant or irrelevant sections, you ensure that bots focus their attention on your high-priority content.

However, misconfiguring your robots.txt file can lead to serious SEO issues, such as inadvertently blocking essential pages from being crawled or indexed.

For example, to allow all bots to access every part of your site, you would use the following robots.txt configuration:

```
User-agent: *
Disallow:
```

On the other hand, if you wanted to block search engine bots from crawling a directory named /private-data/ and a specific file named test-page.html, you would use:

```
User-agent: *
Disallow: /private-data/
Disallow: /test-page.html
```

It’s important to remember that while robots.txt can guide bots on what to avoid, it doesn’t guarantee security. Sensitive information should always be protected through proper authentication methods rather than relying solely on robots.txt.
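Before deploying robots.txt changes, you can sanity-check them with Python’s built-in urllib.robotparser module. This sketch tests the second example above against a few illustrative URLs. Python’s parser applies the first matching rule and does not support wildcards inside paths, whereas Google applies the most specific matching rule. Treat it as a rough check, and rely on Search Console’s robots.txt report for how Googlebot actually reads your file.

```python
from urllib.robotparser import RobotFileParser

# The second robots.txt example, written without blank lines: Python's parser
# treats a blank line as the end of a group, so a blank line between
# User-agent and Disallow would make it drop those Disallow rules.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private-data/
Disallow: /test-page.html
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Illustrative URLs on the placeholder domain used earlier.
for url in (
    "https://example.com/blog/article1",
    "https://example.com/private-data/report.pdf",
    "https://example.com/test-page.html",
):
    verdict = "allowed" if parser.can_fetch("Googlebot", url) else "blocked"
    print(url, "->", verdict)
```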

Crawl Rate

Crawl rate refers to how often search engines, like Google, visit and scan your website to discover and update its content.

A higher crawl rate ensures that search engines index new or updated pages more quickly, which is particularly crucial for dynamic sites or those that frequently add fresh content.

Several factors can influence your crawl rate:

- Server Performance: A fast, reliable server encourages search engines to crawl more pages since they can do so efficiently without encountering timeouts or errors.

For example, if your server is slow or frequently down, search engines might reduce their crawl rate to avoid wasting resources.

- Content Updates: Regularly updating or adding content signals to search engines that your site is active and worth frequent revisits.

For instance, a news website publishing daily articles is likely to have a higher crawl rate than a static website with rare updates.

- Internal Linking Structure: A well-organized internal linking system helps search engines navigate your site more effectively, enabling them to discover and prioritize important pages.

For example, linking from your homepage to a newly published blog post helps it get crawled sooner.

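Google Search Console provides tools to monitor crawl activity.

For instance, you can use the “Crawl Stats” report to identify patterns in crawling behavior and optimize your site accordingly. Search Console no longer lets you set the crawl rate manually. Google retired its crawl rate limiter tool in January 2024, and Googlebot now adjusts its crawl rate automatically based on how quickly and reliably your server responds. If you urgently need Google to crawl less for a short time, Google suggests temporarily returning 500, 503, or 429 status codes.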

Access Restrictions

Access restrictions are essential in controlling which parts of your website can be crawled and indexed by search engines.

Restricting access can help prevent unnecessary or irrelevant content from being indexed, which could otherwise dilute the overall quality and relevance of your site in search results.

Common access restrictions include:

- Password Protection: Sensitive content, such as internal databases, private user areas, or staging sites, should be restricted from public access. Using a login prompt prevents unauthorized crawlers from indexing these areas.

- Meta Directives: You can control indexing by adding noindex, nofollow, or similar directives to a page’s robots meta tag. These directives tell search engines not to index a page or not to follow its links. They don’t block access, so the page must stay crawlable for search engines to see them.

For example, the tag <meta name="robots" content="noindex, nofollow"> tells search engines not to index the page or follow its links.

- HTTP Authentication: Websites can be secured with HTTP authentication.

When a website is protected by a username and password, search engine bots cannot crawl or index the pages unless they are provided with the correct credentials.

- IP Restrictions: Some websites restrict access to content based on the visitor’s IP address.

You can use this method to allow only approved IP ranges (for example, your company network) to reach certain sections. This keeps search engine bots and other outside visitors out.

By utilizing these access restrictions, you can protect sensitive data, make crawling more efficient, and help search engines focus on indexing your most important pages.

Example:

Let’s say you have a members-only area on your website where users can log in to view premium content.

You don’t want search engines to index this section of the site, as it’s behind a paywall. In this case, you could include the following meta tag on these pages:

<meta name="robots" content="noindex, nofollow">

This directive tells search engines not to index the content of the members-only area and not to follow any links from that page. That reduces the chance of them discovering additional restricted pages through it, although pages linked from elsewhere can still be found.
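If you want to audit which pages carry these directives, a short Python sketch using the standard library’s html.parser can pull them out of a page’s HTML. The RobotsMetaParser class and the sample page are illustrative. This version only reads robots and googlebot meta tags and ignores directives sent in an X-Robots-Tag HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None for valueless attributes, so default to "".
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attributes.get("content", "").split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def robots_directives(html: str) -> set[str]:
    """Return the set of robots directives found in an HTML document."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


# Hypothetical members-only page from the example above.
page = '<html><head><meta name="robots" content="noindex, nofollow"></head><body>Premium</body></html>'
found = robots_directives(page)
print("noindex" in found, "nofollow" in found)  # True True
```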

How to See Crawl Activity

Monitoring crawl activity is essential for understanding how search engines interact with your website.

By reviewing crawl data, you can identify potential issues, optimize crawl efficiency, and make adjustments to ensure that search engines are indexing your important pages.

To see crawl activity, you can use several tools. Google Search Console is one of the most reliable and comprehensive tools for this purpose.

Within Google Search Console, the “Crawl Stats” report shows details about how often Googlebot visits your site, including the total number of crawl requests, the total download size, and the average server response time.

Additionally, you can find insights into any errors or issues that Google encountered during the crawl process.

Another valuable tool for tracking crawl activity is server log analysis. Every time a bot visits your site, it generates a log entry on your server.

These logs can show you which pages are being accessed, the frequency of visits, and which bots are making those requests.

Analyzing server logs can reveal important insights, like which pages are not being crawled, where crawl budget might be wasted, or if certain bots are encountering errors on your site.
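As a starting point for log analysis, here is a Python sketch that counts Googlebot requests per URL and collects 4xx and 5xx responses. It assumes your server writes the “combined” access log format that Apache and Nginx commonly use. The sample lines are made up. User-agent strings can be spoofed, so before trusting the results you should verify Googlebot requests, for example with a reverse DNS lookup as Google recommends.

```python
import re
from collections import Counter

# Matches one line of the "combined" access log format (an assumption; adjust to your server).
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

# Made-up sample lines for illustration.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /blog/article1 HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2024:13:56:02 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Oct/2024:13:57:10 +0000] "GET / HTTP/1.1" 200 8042 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]


def googlebot_summary(lines):
    """Count Googlebot hits per path and collect (path, status) pairs for 4xx/5xx responses."""
    hits = Counter()
    errors = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # unparseable line, or not claiming to be Googlebot
        hits[match.group("path")] += 1
        if match.group("status")[0] in "45":
            errors[(match.group("path"), match.group("status"))] += 1
    return hits, errors


hits, errors = googlebot_summary(SAMPLE_LOG)
print("Googlebot hits:", dict(hits))
print("Errors:", dict(errors))
```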

You can also use third-party crawlers like Screaming Frog, which give a detailed breakdown of issues such as broken links, redirect loops, or missing meta tags.

These tools simulate the actions of a search engine bot and provide a comprehensive view of your site’s crawlability.

Example:

Imagine you notice a drop in your website’s visibility in Google search results. After checking Google Search Console, you find that Googlebot has not been able to access some important pages due to a crawl error.

The report shows 404 errors for these pages, meaning that Googlebot attempted to access URLs that no longer exist.

By fixing these issues (e.g., creating 301 redirects to the correct pages or updating links), you can ensure that your pages are accessible again and improve your website’s search performance.

Crawl Adjustments

You can optimize your crawling by:

- Fixing broken links

- Simplifying URL structures

- Prioritizing high-value pages

- Using XML sitemaps to guide search engines
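Most CMSs and SEO plugins generate XML sitemaps automatically, but the format itself is simple. The following Python sketch builds a minimal sitemap with the standard library’s xml.etree.ElementTree. It uses only the loc and lastmod fields, since Google has said it ignores the optional priority and changefreq values. The URLs and dates are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Return sitemap XML for (URL, last-modified date in YYYY-MM-DD) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes characters like &
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder URLs and dates.
print(build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/article1", "2024-01-10"),
]))
```

You would then upload the file (commonly as /sitemap.xml) and submit its URL in Google Search Console.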

Part 3: Understanding Indexing

Indexing is the process by which search engines store and organize the information they’ve crawled from websites, making it available and retrievable during a user search.

Once a page is crawled, search engines analyze its content and relevance, categorizing it in their index. This indexed information is then used to generate search results when users query search engines.

Proper indexing is essential for SEO, as pages that aren’t indexed won’t appear in search results, limiting their visibility to potential visitors.

Therefore, ensuring that your website’s pages are efficiently indexed is crucial for maximizing organic traffic and search engine rankings.

Robots Directives

Meta tags, such as the noindex directive, play a crucial role in controlling which pages should or should not be indexed by search engines.

This allows webmasters to prevent certain pages, such as duplicate content, admin pages, or thank-you pages, from appearing in search engine results, thus improving the overall SEO strategy.

For example, if you have a page that you don’t want to be indexed, you can add the following tag within the <head> section of the HTML:

<meta name="robots" content="noindex">

This instructs search engines like Google not to index the page in question, but it doesn’t prevent the page from being crawled. In fact, the page must remain crawlable: if robots.txt blocks it, search engines never see the noindex tag.

If you also want search engines not to follow the links on that page, use noindex, nofollow instead. To stop a page from being crawled at all, block it in robots.txt. Keep in mind that a blocked URL can still appear in search results (without its content) if other pages link to it.

It’s an effective way to manage SEO and keep unwanted pages from affecting your website’s overall search engine performance.
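Because a page must be crawled for its noindex tag to be seen, robots.txt and meta directives interact in ways that are easy to get wrong. Here is a rough Python sketch of that decision. It uses urllib.robotparser from the standard library. The index_status function, its labels, and the sample rules are illustrative assumptions, and real search engine behavior has more nuances, such as directives sent in an X-Robots-Tag header.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: thank-you pages are blocked from crawling.
ROBOTS = "User-agent: *\nDisallow: /thank-you/\n"


def index_status(robots_txt: str, url: str, meta_robots: str, agent: str = "Googlebot") -> str:
    """Roughly classify how a crawler can treat url.

    meta_robots is the content of the page's robots meta tag ("" if there is none).
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    if not parser.can_fetch(agent, url):
        # The page is never fetched, so a noindex on it is never seen; the bare URL
        # can still be indexed if other pages link to it.
        return "blocked from crawling (noindex not seen)"
    directives = {part.strip().lower() for part in meta_robots.split(",")}
    if "noindex" in directives or "none" in directives:  # "none" means noindex, nofollow
        return "crawlable, excluded from index"
    return "crawlable and indexable"


for url, meta in [
    ("https://example.com/thank-you/order-123", "noindex"),
    ("https://example.com/admin-help", "noindex, nofollow"),
    ("https://example.com/blog", ""),
]:
    print(url, "->", index_status(ROBOTS, url, meta))
```

The first URL shows the common mistake: its noindex tag has no effect because robots.txt prevents the page from being fetched.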

Canonicalization

Canonical tags play a vital role in helping search engines identify the preferred version of a webpage, thereby preventing issues related to duplicate content.

When multiple URLs lead to the same or similar content, search engines may struggle to determine which version to index and rank. This can result in lower rankings for all versions or cause content to be ignored.

To avoid this, you can implement a canonical tag on pages with duplicate content, directing search engines to the preferred version. Here’s how you can use the canonical tag:

<link rel="canonical" href="https://example.com/preferred-page-url">

By adding this tag in the <head> section of the HTML, you signal to search engines which URL should be considered the authoritative version.

This is particularly helpful for e-commerce sites with product variations, blogs with multiple page versions, or websites with content accessible through multiple URLs.

Properly using canonicalization ensures that link equity and page rank are consolidated on a single page, which can improve overall SEO performance and prevent content duplication from negatively impacting your site’s search engine visibility.
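To audit canonical tags across duplicate URLs, you can extract them with a small Python sketch like the one below. The canonical_url function is a hypothetical helper. It resolves relative href values against the page URL and returns None when a page has no canonical tag or several conflicting ones, since search engines may ignore conflicting canonicals.

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Collects href values from <link rel="canonical"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and attributes.get("href"):
            self.hrefs.append(attributes["href"])


def canonical_url(page_url: str, html: str) -> Optional[str]:
    """Return the absolute canonical URL, or None if it is missing or ambiguous."""
    parser = CanonicalParser()
    parser.feed(html)
    # Resolve relative hrefs first so "/shirt" and "https://example.com/shirt" count as one.
    resolved = {urljoin(page_url, href) for href in parser.hrefs}
    if len(resolved) != 1:
        return None  # no canonical tag, or several pointing at different URLs
    return resolved.pop()


# A hypothetical product-variant URL that declares the main product page as canonical.
variant = "https://example.com/shirt?color=blue"
print(canonical_url(variant, '<head><link rel="canonical" href="/shirt"></head>'))
```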

Check Indexing

Use Google Search Console to check which pages are indexed. Navigate to the “Page indexing” report (formerly called “Coverage”) to identify:

- Indexed pages

- Excluded pages

- Errors preventing indexing

Reclaim Lost Links

Lost links occur when indexed pages are deleted, moved, or undergo structural changes without implementing proper redirects.

This can result in a decrease in organic traffic and disrupt the user experience, as both users and search engine bots may encounter 404 errors.

To prevent this, it is essential to implement 301 redirects, which permanently redirect both users and search engine bots from the old URL to the new, correct URL.

By ensuring that any deleted or moved pages point to a relevant, active page, you maintain your site’s link equity and preserve its SEO performance.

Additionally, monitor your website regularly for broken or lost links using tools like Google Search Console, which will allow you to quickly address any issues that arise.
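Redirects are usually configured in your web server, CMS, or CDN, but the underlying mechanism is the same everywhere: respond with a 301 status and a Location header pointing at the new URL. This framework-neutral Python sketch shows that as a plain WSGI application. The REDIRECTS mapping is hypothetical, and a real site would serve its normal pages instead of the 404 fallback.

```python
# Hypothetical mapping of removed or moved paths to their replacements.
REDIRECTS = {
    "/old-blog/seo-tips": "/blog/technical-seo-tips",
    "/products/discontinued-shirt": "/mens/shirts",
}


def app(environ, start_response):
    """Minimal WSGI app: 301 for known old paths, 404 for everything else."""
    path = environ.get("PATH_INFO", "/")
    target = REDIRECTS.get(path)
    if target is not None:
        # 301 Moved Permanently tells browsers and bots the move is permanent.
        start_response("301 Moved Permanently", [("Location", target)])
        return [b""]
    start_response("404 Not Found", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Not found"]
```

To try it locally, you could run wsgiref.simple_server.make_server("", 8000, app).serve_forever() and request one of the old paths.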

Add Internal Links

A strong internal linking structure is fundamental for a well-organized website. Internal links are hyperlinks that connect one page of your website to another, making it easier for both search engines and users to navigate the site.

A well-planned internal linking structure ensures that all important pages are easily discoverable by search engines, which helps in their indexing process.

Furthermore, internal links guide users through your content, improving user experience and increasing the time spent on your site.

Ensure that internal links are contextually relevant and use descriptive anchor text that clearly explains the linked content. Regularly auditing your internal links helps maintain an effective structure and allows you to fix any broken links quickly.
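Given crawl data from a tool like Screaming Frog, a few lines of Python can flag two common internal linking problems. The first is orphan pages, which are URLs you want indexed that no other page links to. The second is non-descriptive anchor text. The data structures and the list of generic anchors below are illustrative assumptions.

```python
# Hypothetical crawl data: each page maps to its outgoing (target, anchor text) links.
INTERNAL_LINKS = {
    "/": [("/blog", "Blog"), ("/services", "Technical SEO services")],
    "/blog": [("/blog/crawl-budget", "read more"), ("/", "Home")],
    "/blog/crawl-budget": [("/blog/crawl-budget", "this post")],
}

# Hypothetical set of URLs you expect to be indexed, e.g. taken from your XML sitemap.
SITEMAP_URLS = {"/", "/blog", "/services", "/blog/crawl-budget", "/blog/old-case-study"}

# Anchor texts that say nothing about the linked page (illustrative, not exhaustive).
GENERIC_ANCHORS = {"click here", "here", "read more", "learn more"}


def orphan_pages(links, known_urls, homepage="/"):
    """Known URLs that no other page links to."""
    linked = {
        target
        for source, outgoing in links.items()
        for target, _anchor in outgoing
        if target != source  # a page linking to itself doesn't help discovery
    }
    return sorted(known_urls - linked - {homepage})


def generic_anchors(links):
    """(source, target, anchor) triples whose anchor text is non-descriptive."""
    return [
        (source, target, anchor)
        for source, outgoing in links.items()
        for target, anchor in outgoing
        if anchor.strip().lower() in GENERIC_ANCHORS
    ]


print("Orphan pages:", orphan_pages(INTERNAL_LINKS, SITEMAP_URLS))
print("Generic anchors:", generic_anchors(INTERNAL_LINKS))
```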

Add Schema Markup

Schema markup, or structured data, is a powerful SEO tool that helps search engines understand the content on your website more clearly.

It uses a specific vocabulary of tags or code added to your web pages, allowing search engines to interpret the context and details of your content.

For example, adding FAQ schema markup can make your FAQ section eligible to appear directly in search results, although since 2023 Google shows FAQ rich results mainly for well-known, authoritative government and health websites. Other common schema types include product schema, recipe schema, and article schema.

Implementing schema markup not only helps search engines read and understand your content but can also make it eligible for enhanced search result features. Rich snippets, for example, display additional information such as ratings, prices, or event dates, making your site more visible and attractive to users. Structured data does not guarantee these features, however.
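Schema markup is most often added as a JSON-LD script in the page’s HTML. This Python sketch generates a Product snippet using schema.org’s Product, Offer, and AggregateRating types. The product details are placeholders, and the values must match what is visible on the page. You should check the result with Google’s Rich Results Test before relying on it.

```python
import json


def product_json_ld(name: str, price: float, currency: str, rating: float, review_count: int) -> str:
    """Return a JSON-LD <script> block describing a product (schema.org vocabulary)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,  # ISO 4217 code, e.g. "USD"
            "availability": "https://schema.org/InStock",
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


# Placeholder product details for illustration.
print(product_json_ld("Linen Shirt", 29, "USD", 4.6, 87))
```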

Part 4: Technical SEO Quick Wins

Some simple fixes can make a big impact on your site’s SEO performance:

- Compress large images to improve site speed.

- Use caching tools like Cloudflare or WP Rocket.

- Switch to HTTPS for added security.

- Check for and fix broken links.

- Submit XML sitemaps to Google Search Console.

- Optimize your site for mobile devices.

Part 5: Additional Technical SEO Projects

1. Page Experience Signals

Google’s Page Experience update places a significant emphasis on user-centric metrics that impact how users interact with and experience a website.

These metrics include mobile-friendliness, which ensures your site is optimized for mobile devices, and HTTPS, which indicates a secure connection.

Additionally, the update targets intrusive interstitials (such as pop-ups), which can create a disruptive browsing experience for users.

Websites that provide a smooth, fast, and secure user experience are more likely to perform well in search rankings, as Google prioritizes sites that meet these criteria.

2. Core Web Vitals

Core Web Vitals are a set of user-centered metrics that measure real-world experience and performance. Optimizing these metrics is crucial to maintaining a positive user experience:

- Largest Contentful Paint (LCP): This measures how quickly the largest visible element on a page (such as an image, video, or block of text) renders. Google considers an LCP of 2.5 seconds or less good. A fast LCP is essential for keeping users engaged and reducing bounce rates.

- Interaction to Next Paint (INP): INP gauges how quickly a page responds to user interactions, such as clicking a button or link, throughout the visit. In March 2024 it replaced First Input Delay (FID), which only measured the delay before the first interaction, as a Core Web Vital. An INP of 200 milliseconds or less is considered good.

- Cumulative Layout Shift (CLS): CLS evaluates the visual stability of a page. Pages that shift unexpectedly (e.g., buttons or content moving around) lead to frustrating user experiences. Ensuring your content loads in a stable, predictable way reduces the likelihood of visual disruptions. A CLS score of 0.1 or less is considered good.
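Google publishes thresholds for rating each Core Web Vital as good, needs improvement, or poor. It assesses them at the 75th percentile of real-user page loads. This Python sketch encodes those thresholds. The rate function is illustrative and expects a value you have already measured, for example from PageSpeed Insights or Search Console.

```python
# Google's published boundaries: (upper limit for "good", upper limit for "needs improvement").
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric: str, value: float) -> str:
    """Rate a 75th-percentile field value for one Core Web Vital."""
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if value <= good_max:
        return "good"
    if value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


# Placeholder measurements for illustration.
for metric, value in [("LCP", 3.1), ("INP", 150), ("CLS", 0.3)]:
    print(metric, value, "->", rate(metric, value))
```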

3. HTTPS

Migrating to HTTPS is no longer optional for websites; it’s crucial for both security and SEO. HTTPS (Hypertext Transfer Protocol Secure) encrypts the data exchanged between a user’s browser and the website, providing protection against data breaches and unauthorized access.

Google has confirmed that HTTPS is a ranking signal, though a lightweight one, so sites without HTTPS can be at a disadvantage in search rankings.

Beyond SEO, HTTPS builds trust with users, signaling that their personal information is being protected during transactions and interactions.

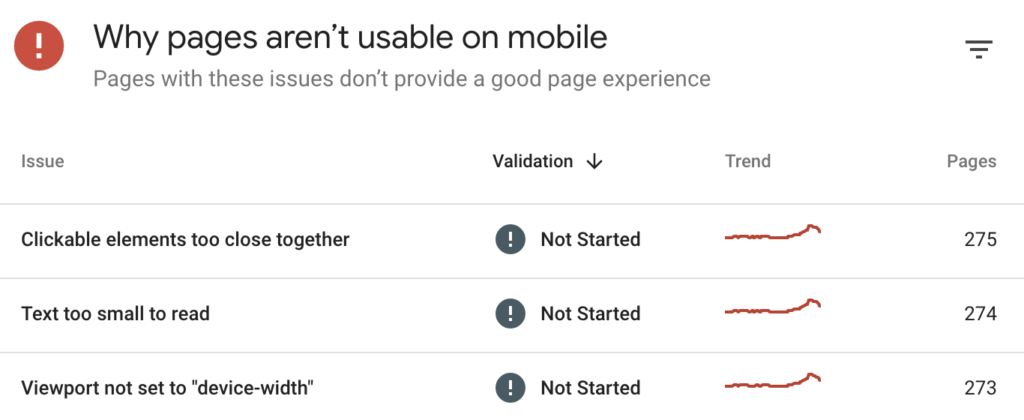

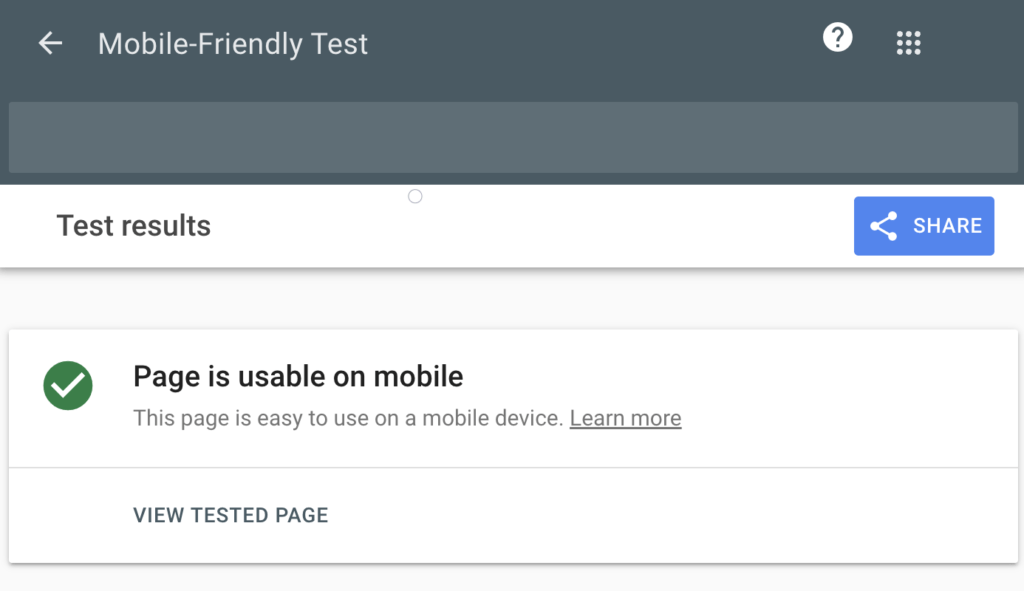

4. Mobile-Friendliness

With mobile traffic surpassing desktop traffic, ensuring your website is mobile-friendly is a critical part of technical SEO.

Responsive web design, which automatically adjusts a site’s layout depending on the device it’s viewed on, is essential for providing a seamless user experience across all devices.

Google also uses mobile-first indexing, meaning it primarily uses the mobile version of a site’s content for indexing and ranking.

To verify your site’s mobile compatibility, run a Lighthouse audit in Chrome DevTools or use PageSpeed Insights. Google retired its standalone Mobile-Friendly Test tool in December 2023.

5. Interstitials

Interstitials, or intrusive pop-ups that obstruct a user’s view of content, can significantly harm the user experience, especially when they are difficult to dismiss or prevent the user from accessing key content.

Pages that use intrusive interstitials that negatively impact user experience may not rank as highly in Google Search. This includes full-page pop-ups or interstitials that appear immediately when a user lands on the page.

To avoid this, ensure that any pop-ups or interstitials you use do not obstruct critical content or frustrate users trying to engage with your site.

6. Hreflang — For Multiple Languages

The hreflang attribute is a crucial SEO tool for websites that cater to multiple languages or regions. It helps search engines understand the language and regional targeting of specific web pages.

By properly using the hreflang tag, you can prevent content duplication issues and ensure the right version of a page appears in search results based on a user’s language and location.

For example, if you have a version of your website for both English-speaking users in the United States and the UK, you would use the hreflang tag to differentiate the content for each group, thus improving SEO and user experience.

Here’s an example of how to use the hreflang tag:

<link rel="alternate" hreflang="en-us" href="https://example.com/en-us/">

This tag tells search engines that the page at this URL is specifically for U.S. English speakers. Similarly, for UK-based English content, you would use:

<link rel="alternate" hreflang="en-gb" href="https://example.com/en-gb/">

By implementing the hreflang tag correctly, you enhance your chances of ranking in regional searches and improve user satisfaction by delivering content tailored to their language or location.
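Hreflang annotations must be reciprocal. Each language version should list itself and every other version, or search engines may ignore the annotations. An x-default entry can also point users who match none of the listed languages to a fallback page. This Python sketch generates the complete set of tags to place on every version. The x-default URL is a hypothetical assumption.

```python
from html import escape

# Language/region versions of one page, matching the URLs in the examples above.
ALTERNATES = {
    "en-us": "https://example.com/en-us/",
    "en-gb": "https://example.com/en-gb/",
}


def hreflang_tags(alternates: dict[str, str], default_url: str) -> str:
    """Build the full set of hreflang links; every version should carry the same set."""
    lines = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}">'
        for code, url in sorted(alternates.items())
    ]
    # Fallback for users whose language/region matches none of the versions (assumed URL).
    lines.append(f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}">')
    return "\n".join(lines)


print(hreflang_tags(ALTERNATES, "https://example.com/"))
```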

7. General Maintenance

Routine website maintenance is an essential part of keeping your website in top shape for both users and search engines.

Regular audits help ensure that your site’s technical aspects are running smoothly, improving both user experience and SEO performance.

Regular checks for issues such as outdated content, broken links, and crawl errors can significantly impact your site’s visibility and effectiveness.

Things to regularly audit for include:

- Outdated content: Ensure that your content remains fresh and relevant, as outdated content can negatively affect your SEO rankings.

- Broken links: These can harm your site’s crawlability and frustrate users. Regularly test internal and external links to ensure they are working.

- Crawl errors: Errors that prevent search engines from accessing certain pages should be fixed immediately to avoid hindering your website’s ranking potential.

By conducting frequent audits, you can identify potential problems before they escalate, keeping your site running efficiently and SEO-optimized.

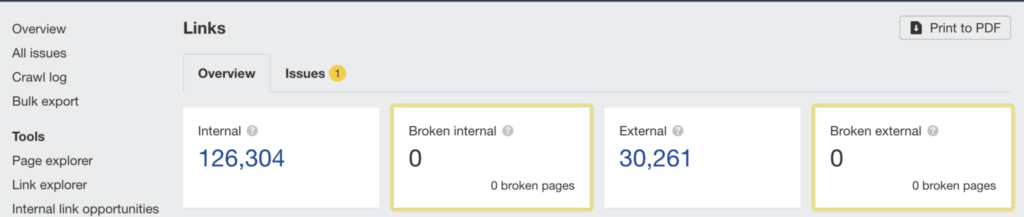

8. Broken Links

Broken links, also known as dead links, occur when a URL no longer works, either due to the target page being removed, moved without a redirect, or a typo in the link.

These broken links can negatively impact the user experience, as visitors may land on 404 error pages or irrelevant content.

Additionally, large numbers of broken links waste crawl budget, stop link equity from reaching the intended pages, and suggest poor website management.

To avoid these issues:

- Fix or redirect broken links: Either update the link to the correct URL or set up a proper 301 redirect to guide both users and search engines to the right page.

- Regularly check for broken links: Tools like Screaming Frog and Google Search Console can help you identify broken links.

Fixing or redirecting broken links is crucial for maintaining a healthy site, ensuring smooth navigation for users, and protecting your SEO performance.

9. Redirect Chains

Redirect chains occur when a webpage is redirected to another page, which is then redirected to a third one, and so on.

This slows down page loads and wastes valuable crawl budget, as search engine bots need to follow each redirect in the chain.

Chains of redirects may also lead to a loss in page authority and cause indexing delays, affecting your rankings.

To resolve this:

- Eliminate redirect chains: Ensure that any redirects go directly to the final destination without unnecessary intermediate steps.

- Use 301 redirects wisely: If you’re moving content or restructuring your website, make sure you implement direct 301 redirects to ensure the transfer of SEO value.

By eliminating redirect chains, you help improve crawl efficiency, speed up load times, and preserve the integrity of your website’s SEO.
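If you keep a list of your redirects (for example, exported from your server configuration or a crawl), this Python sketch rewrites every source to point straight at its final destination and flags redirect loops. The sample mapping is hypothetical, and the 10-hop limit is an arbitrary safety cap.

```python
def final_destination(url: str, redirects: dict[str, str], max_hops: int = 10) -> str:
    """Follow redirects from url to the end of the chain, detecting loops."""
    path = [url]
    while url in redirects:
        url = redirects[url]
        if url in path:
            raise ValueError("redirect loop: " + " -> ".join(path + [url]))
        path.append(url)
        if len(path) - 1 > max_hops:
            raise ValueError("too many redirect hops starting at " + path[0])
    return url


def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Point every redirect source directly at its final destination."""
    return {source: final_destination(source, redirects) for source in redirects}


# Hypothetical chain: /a -> /b -> /c -> /d
CHAIN = {"/a": "/b", "/b": "/c", "/c": "/d"}
for source, target in flatten(CHAIN).items():
    print(f"301 {source} -> {target}")
```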

Part 6: Technical SEO Tools

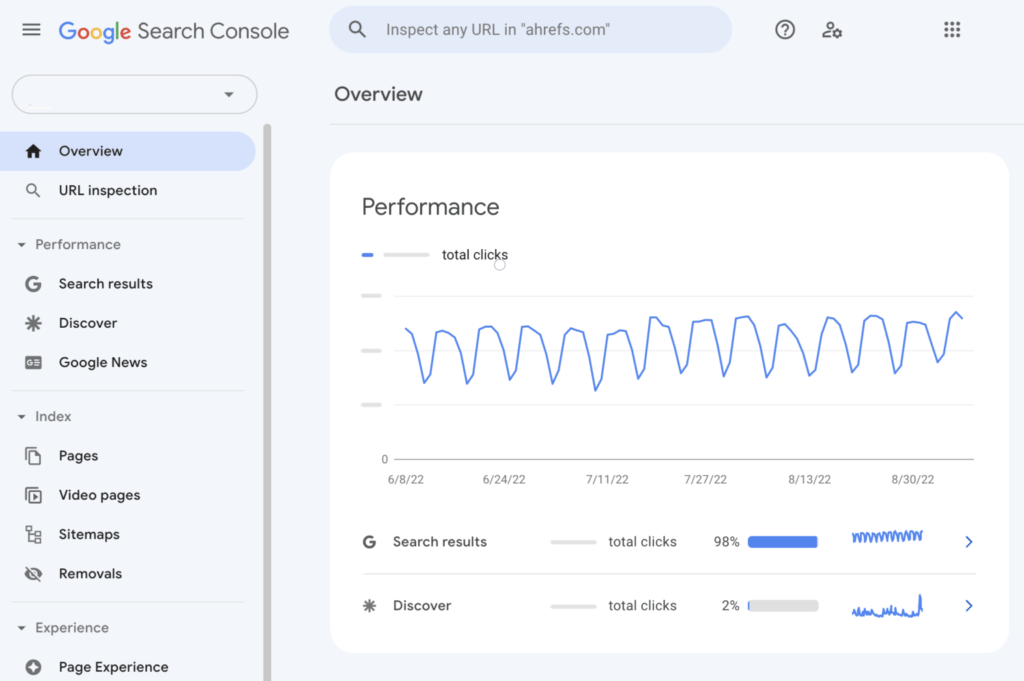

Google Search Console

Google Search Console is a free, powerful tool offered by Google that allows you to monitor the performance of your website in search results.

It helps you track how Googlebot crawls your website, detect indexing issues, and gain insights into your site’s overall health.

Through this tool, you can also view search analytics, review Core Web Vitals data from real users, and submit sitemaps to enhance your site’s visibility on Google.

Google Search Console is an essential tool for identifying and resolving technical SEO problems and optimizing your site for search engine performance.

Google’s Mobile-Friendly Test

Google’s Mobile-Friendly Test was a tool that evaluated how well a page performed on mobile devices, checking factors like text readability, button accessibility, and overall usability. Google retired it in December 2023, along with the Mobile Usability report in Search Console.

With mobile-first indexing now the standard, mobile-friendliness still matters for both user experience and SEO.

To audit mobile usability today, you can use Lighthouse in Chrome DevTools or PageSpeed Insights. Both test pages under mobile conditions and provide actionable suggestions for improvement.

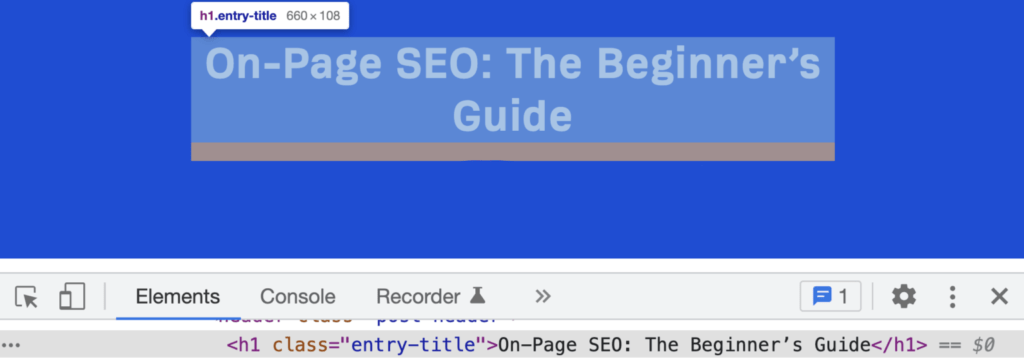

Chrome DevTools

Chrome DevTools is a set of web development tools built directly into the Google Chrome browser.

These tools allow you to inspect your website’s page speed, responsiveness, security, and other technical elements in real time.

With Chrome DevTools, you can troubleshoot performance issues, debug JavaScript, analyze network activity, and evaluate the overall health of your site.

By using this tool, you can pinpoint specific areas of improvement to optimize your website for better user experience, faster load times, and improved search rankings.

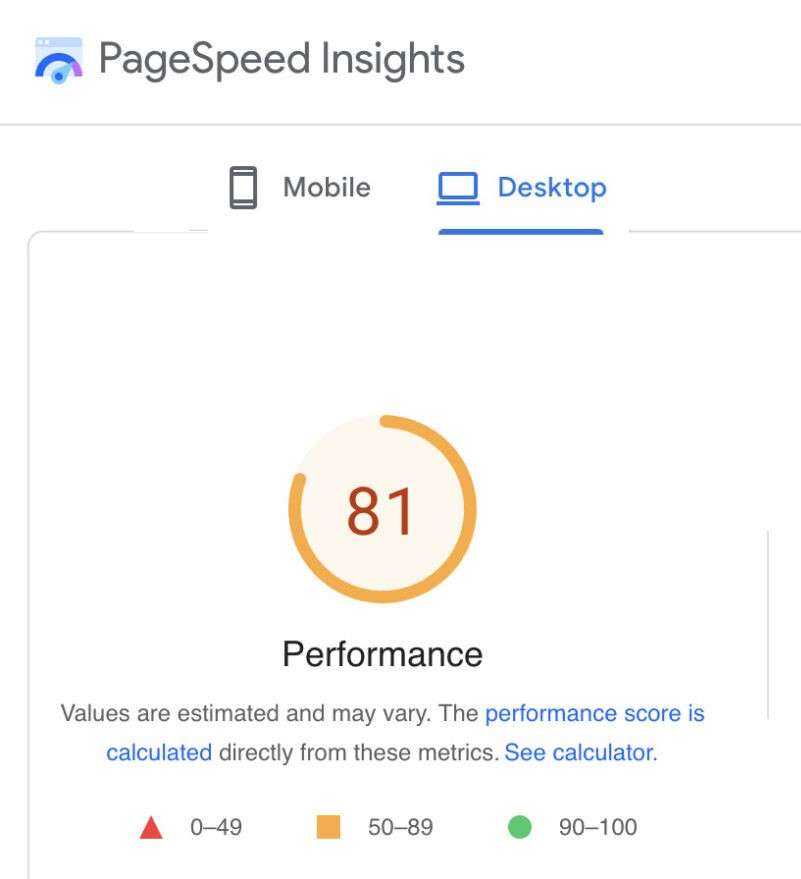

PageSpeed Insights

PageSpeed Insights is a free tool from Google that analyzes your website’s performance, specifically focusing on page load speed. It evaluates both mobile and desktop versions of your site and provides detailed recommendations for improving load times.

These recommendations often include optimizing images, reducing server response times, leveraging browser caching, and improving JavaScript execution.

By addressing these issues, you can enhance your site’s speed, leading to better user experiences, lower bounce rates, and higher rankings in search engine results.
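PageSpeed Insights also offers an API, which is useful for checking many URLs on a schedule. This Python sketch builds a request to the v5 runPagespeed endpoint and reads the Lighthouse performance score (0 to 1) from the response. It assumes the v5 response shape. Regular automated use typically requires an API key, passed as the key parameter, which this sketch omits.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, strategy: str = "mobile") -> str:
    """Build a PageSpeed Insights API request; strategy is "mobile" or "desktop"."""
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})


def performance_score(response: dict) -> float:
    """Extract the Lighthouse performance score (0 to 1) from a v5 API response."""
    return response["lighthouseResult"]["categories"]["performance"]["score"]


def fetch_performance_score(page_url: str, strategy: str = "mobile") -> float:
    """Call the live API (needs network access; no API key is sent here)."""
    with urlopen(psi_request_url(page_url, strategy), timeout=120) as response:
        return performance_score(json.load(response))
```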